Pitch detection has evolved far beyond simple frequency analysis.

While traditional approaches like FFT peak-picking and YIN rely on fixed signal-processing formulas, modern systems use machine learning (ML) to learn what pitch sounds like from data, not just measure it.

Machine learning has made pitch detection:

- More accurate in noisy conditions

- More stable for voice and polyphonic signals

- More adaptive to different instruments, timbres, and octaves

In this guide, we’ll explore how AI-based pitch detectors work, how they compare to traditional methods, and what role privacy and browser processing play in the future of pitch technology.

Traditional Pitch Detection — Quick Recap

Classic pitch detection relies on Digital Signal Processing (DSP) math to find repeating cycles in an audio waveform.

Common Classical Algorithms

| Algorithm | Core Idea | Strength | Weakness |
|---|---|---|---|
| FFT (Fast Fourier Transform) | Analyzes energy across frequencies | Fast and simple | Struggles with noise and overtones |
| Autocorrelation | Matches signal with time-shifted versions | Stable for clean tones | Slow and inaccurate in polyphony |
| YIN Algorithm | Difference-function refinement of autocorrelation | Robust for vocals | Threshold needs tuning for best accuracy |
| Cepstrum Analysis | Separates pitch periodicity from the spectral envelope | Good for speech | Limited octave range |

These methods are lightweight and fast, but they assume that your signal is clean, monophonic, and stable, something real voices and instruments rarely are.
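To make the classical approach concrete, here is a minimal sketch of autocorrelation-based detection in plain Python. The function name `autocorr_pitch`, the 80 to 1000 Hz search range, and the 0.9 peak threshold are illustrative assumptions, not the settings of any particular tool. The function compares a frame with delayed copies of itself and returns the first delay that matches strongly, which works for a clean monophonic tone and degrades on noisy or polyphonic input.

```python
import math

def autocorr_pitch(samples, sample_rate, fmin=80.0, fmax=1000.0, threshold=0.9):
    """Estimate F0 (Hz) of one monophonic frame; returns 0.0 if no clear pitch."""
    n = len(samples)
    min_lag = max(1, int(sample_rate / fmax))      # shortest period searched
    max_lag = min(int(sample_rate / fmin), n - 2)  # longest period searched
    scores = []
    for lag in range(min_lag, max_lag + 1):
        a = samples[: n - lag]   # original frame
        b = samples[lag:]        # same frame delayed by `lag` samples
        num = sum(x * y for x, y in zip(a, b))
        den = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
        scores.append(num / den if den > 0 else 0.0)
    # Take the FIRST strong local peak: later peaks at 2x or 3x the period
    # score just as well and would cause octave errors.
    for k in range(1, len(scores) - 1):
        if scores[k] >= threshold and scores[k - 1] < scores[k] >= scores[k + 1]:
            return sample_rate / (min_lag + k)
    return 0.0

# Usage: a synthetic 200 Hz sine sampled at 8 kHz
sr = 8000
tone = [math.sin(2 * math.pi * 200 * i / sr) for i in range(1024)]
print(autocorr_pitch(tone, sr))
```

Because the result is quantized to whole-sample lags, real implementations usually interpolate around the peak to get sub-sample precision.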

The Rise of Machine Learning Pitch Detection

Machine learning takes a different approach.

Instead of searching for peaks with a fixed formula, a model learns to recognize patterns of pitch directly from training data, usually labeled with the true pitch.

By feeding thousands of hours of recorded sounds — voices, instruments, environmental tones — into a neural network, the system learns to estimate the fundamental frequency (F₀) even when harmonics, noise, or distortion are present.

Popular ML-Based Models

| Model | Description | Accuracy Range (approx.) | Platform |
|---|---|---|---|
| CREPE (2018) | Deep convolutional neural net that reads the raw waveform, trained on diverse audio datasets | ±5 cents | TensorFlow, Python |
| SPICE (2019) | Lightweight, self-supervised model suited to mobile and browser use | ±10 cents | TensorFlow.js |
| pYIN | Probabilistic variant of YIN with hidden Markov model (HMM) smoothing | ±5–8 cents | Sonic Visualiser (Vamp plugin), librosa |
| DeepF0 | CNN-based continuous frequency tracker | ±4 cents | Research-grade (C++) |

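These systems can track the dominant pitch through accompaniment, crowded mixes, and even speech with background chatter, situations where classic DSP methods often fail. Note that CREPE, SPICE, and pYIN are monophonic trackers: they return one F₀ per frame, so transcribing every note of a polyphonic recording calls for dedicated multi-pitch models.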
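The accuracy figures in the table are given in cents, where 100 cents equal one equal-tempered semitone and 1200 cents equal one octave. As a small worked example, the helper below (the name `cents_error` is just an illustration) converts an estimated and a reference frequency into a signed error in cents:

```python
import math

def cents_error(estimated_hz, reference_hz):
    """Signed pitch error in cents; positive means the estimate is sharp."""
    return 1200.0 * math.log2(estimated_hz / reference_hz)

# Usage: an octave is 1200 cents, and 441 Hz against A4 = 440 Hz is a few cents sharp
print(cents_error(880.0, 440.0))
print(round(cents_error(441.0, 440.0), 2))
```

Measuring error in cents rather than hertz keeps the tolerance musically consistent: a 1 Hz error matters far more at 80 Hz than at 2000 Hz.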

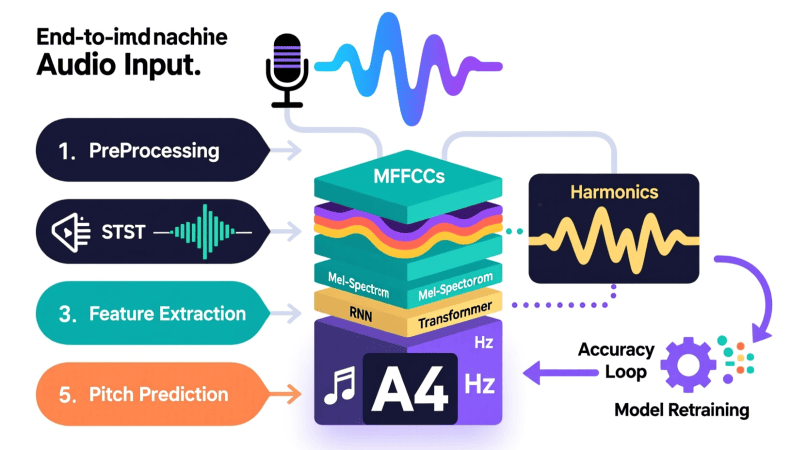

How Neural Pitch Detection Works

Machine learning pitch detectors use a two-phase process:

1. Training Phase

- Thousands of labeled audio samples (each with known F₀) are fed into a neural network.

- The model learns relationships between spectral shapes, harmonic spacing, and timbral features.

- Once trained, it can infer pitch even in unfamiliar sounds.

2. Inference Phase

- The model receives raw or pre-processed waveform data.

- It outputs an estimated F₀ (fundamental frequency) — sometimes along with a confidence score.

- Post-processing smooths and filters the pitch contour for readability.
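The post-processing step can be sketched as follows. This is one simple approach, assuming the model returns a per-frame F₀ list and a matching confidence list; the function name `smooth_contour`, the 0.5 confidence cutoff, and the 5-frame median window are hypothetical choices, and real systems may use other smoothers such as Viterbi decoding.

```python
from statistics import median

def smooth_contour(f0, confidence, min_conf=0.5, window=5):
    """Gate out low-confidence frames, then median-filter the remaining ones."""
    # Frames the model is unsure about are treated as unvoiced (None).
    gated = [f if c >= min_conf else None for f, c in zip(f0, confidence)]
    half = window // 2
    smoothed = []
    for i, f in enumerate(gated):
        if f is None:
            smoothed.append(None)
            continue
        # Median of the voiced neighbours removes isolated jumps such as octave errors.
        neighbours = [g for g in gated[max(0, i - half): i + half + 1] if g is not None]
        smoothed.append(median(neighbours))
    return smoothed

# Usage: one octave glitch (440) and one low-confidence frame (300)
f0 = [220, 220, 440, 220, 220, 300]
conf = [0.9, 0.9, 0.9, 0.9, 0.9, 0.1]
print(smooth_contour(f0, conf))
```

A median filter is used here rather than a moving average because it discards a single outlier frame outright instead of smearing it into its neighbours.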

Key Techniques Used

- Convolutional Neural Networks (CNNs) for pattern extraction.

- Recurrent Neural Networks (RNNs) or GRUs for temporal pitch tracking.

- Mel-scaled or constant-Q spectrograms as model input in some systems, to mimic human hearing; others, such as CREPE, read the raw waveform directly.
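For readers unfamiliar with the mel scale behind mel-scaled spectrograms, here is a short sketch of one widely used conversion formula. Audio libraries differ in the exact variant they implement, so treat the constants 2595 and 700 as one common convention rather than the definition used by any specific model.

```python
import math

def hz_to_mel(hz):
    """Convert frequency in Hz to mels (one common log-based formula)."""
    return 2595.0 * math.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

# Usage: equal steps in Hz shrink on the mel scale as frequency rises
for hz in (100, 1000, 4000, 8000):
    print(hz, round(hz_to_mel(hz), 1))
```

The scale is roughly linear at low frequencies and logarithmic at high ones, which mirrors how listeners perceive pitch spacing.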

ML vs Classical Algorithms — Side-by-Side

| Feature | Classical (FFT/YIN) | Machine Learning (CREPE/SPICE) |
|---|---|---|
| Accuracy in Noise | Moderate | Excellent |
| Polyphonic Detection | Weak | Stronger at tracking the dominant pitch; full multi-pitch output needs dedicated models |
| Latency | Very low | Moderate |
| Hardware Requirements | Light | Heavier |
| Explainability | Fully transparent | Black-box |
| Local vs Cloud | Local | Often cloud or GPU-based |
| Ideal Use | Simple real-time detection | Advanced vocal/music analysis |

In short:

Traditional DSP is ideal for real-time browser tools,

while ML models shine in studio, AI, and research environments.
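Part of the latency difference is plain arithmetic: a detector cannot report a pitch until it has collected a full analysis frame. The sketch below uses hypothetical helper names and covers buffering delay only, not the time a model spends computing, which comes on top for neural approaches.

```python
import math

def frame_latency_ms(frame_length, sample_rate):
    """Time needed to collect one analysis frame, in milliseconds."""
    return 1000.0 * frame_length / sample_rate

def min_frame_length(lowest_hz, sample_rate, periods=2):
    """Smallest frame (in samples) that spans `periods` cycles of lowest_hz."""
    return math.ceil(periods * sample_rate / lowest_hz)

# Usage: a 1024-sample frame at 16 kHz, and the frame needed to see two
# cycles of an 80 Hz tone at 8 kHz
print(frame_latency_ms(1024, 16000))
print(min_frame_length(80, 8000))
```

Low notes force longer frames because at least a couple of full cycles must fit in the window, which is why bass instruments are harder to track with low delay.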

Example: CREPE in Action

CREPE (Convolutional Representation for Pitch Estimation) does not convert audio into a spectrogram first. Its deep convolutional network reads the time-domain waveform directly.

It divides audio into short, overlapping “frames” and predicts the most likely F₀ for each one.

Input: Raw waveform → Split into short frames

↓

Neural Network → Predict F₀ (Hz) for each frame

↓

Output: Continuous pitch contour with confidence score

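In its authors' published evaluation, CREPE keeps raw pitch accuracy above 90% even at a strict 10-cent threshold and holds up better than classical baselines as noise is added, a milestone for both research and performance tracking.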
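To show how a per-frame network output becomes a frequency, here is a hedged sketch of the decoding step. It assumes the output is a 360-value activation vector with bins spaced 20 cents apart, and the constants below (including the cents offset and the 10 Hz reference) are modeled on the open-source CREPE code as commonly described; treat them as assumptions rather than a specification. The function names are illustrative.

```python
import math

N_BINS = 360                        # assumed number of pitch bins
CENTS_PER_BIN = 20.0                # assumed bin spacing
CENTS_OFFSET = 1997.3794084376191   # assumed cents value of bin 0 (relative to 10 Hz)
REF_HZ = 10.0                       # assumed reference frequency for the cents scale

def bin_to_cents(bin_index):
    return CENTS_OFFSET + CENTS_PER_BIN * bin_index

def bin_to_hz(bin_index):
    return REF_HZ * 2.0 ** (bin_to_cents(bin_index) / 1200.0)

def decode_frame(activation, radius=4):
    """Return (f0_hz, confidence) from one frame's activation vector."""
    peak = max(range(len(activation)), key=activation.__getitem__)
    lo, hi = max(0, peak - radius), min(len(activation), peak + radius + 1)
    weights = activation[lo:hi]
    total = sum(weights)
    if total == 0:
        return 0.0, 0.0
    # Activation-weighted average of cents around the peak gives finer
    # resolution than the 20-cent bin spacing alone.
    cents = sum(w * bin_to_cents(i) for i, w in zip(range(lo, hi), weights)) / total
    return REF_HZ * 2.0 ** (cents / 1200.0), activation[peak]

# Usage: a synthetic activation with all energy in bin 100
act = [0.0] * N_BINS
act[100] = 1.0
print(decode_frame(act))
```

The peak activation doubles as the confidence score, which is what downstream smoothing can use to discard unvoiced or uncertain frames.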

Why PitchDetector.com Uses Local DSP (Not Cloud AI)

While ML offers top accuracy, it comes with trade-offs:

- Requires high CPU/GPU power

- Often sends audio to remote servers for inference

- Introduces latency and privacy concerns

At PitchDetector.com, we focus on on-device analysis — using proven mathematical methods (FFT, YIN, Autocorrelation) that:

- Run instantly in your browser

- Require no internet upload

- Deliver stable, reproducible results

Because your audio never leaves your device, this approach offers privacy, speed, and reliability for musicians and educators.

Learn more: Data Security Hub

Hybrid Future — The Best of Both Worlds

The future of pitch detection lies in hybrid models — combining the efficiency of DSP with the accuracy of ML.

- DSP finds candidate frequencies (fast pre-screening).

- ML refines the results for complex or noisy signals.

- Edge AI chips and TensorFlow.js are making real-time neural pitch detection in browsers increasingly practical.

As this matures, expect far more robust pitch analysis for difficult sounds, right in your web browser, with no cloud required.

Learn More

- Pitch Tracking vs Pitch Detection

- How FFT Works in Pitch Detection

- Real-Time Browser Pitch Detection Explained

- Accuracy Tests

- Web Audio API Pitch Detection

FAQ — Machine Learning in Pitch Detection

Q1: Is machine learning more accurate than FFT or YIN?

Yes — in noisy or complex signals. ML models like CREPE outperform classic DSP by learning harmonic patterns, not just counting frequencies.

Q2: Can I use neural pitch detection in the browser?

Yes, through lightweight TensorFlow.js models like SPICE, though with slightly higher CPU usage.

Q3: Why doesn’t every online tuner use ML yet?

Because ML requires heavier computation and often depends on large downloaded models or server-side inference, which can compromise real-time speed or privacy.

Modern systems benefit from data-driven models explained in the science of pitch perception to better mimic human hearing.

To understand tuning baselines, a quick read on the A440 tuning standard adds useful context.

When background noise interferes, the guide on noise and background interference shows how algorithms stay reliable.

Developers often refine outputs using tips from accuracy calibration to keep predictions tight.

If you’re converting outputs, the overview of a note frequency converter explains the translation step.

File-based workflows improve with insights from how audio file pitch detection works behind the scenes.

For broader context, the primer on what a pitch detector is helps frame how ML fits into the toolchain.

PitchDetector.com is a project by Ornella, blending audio engineering and web technology to deliver precise, real-time pitch detection through your browser. It is designed for musicians, producers, and learners who want fast, accurate tuning without installing any software.